Migrating from Amazon EMR to Databricks? You’re not alone, and you shouldn’t do it alone either.

As organizations lean toward real-time analytics, open data formats, and AI-readiness, more and more teams are re-evaluating their AWS-heavy data stacks, especially Amazon EMR and Redshift. Both have been foundational to modern data architectures in the AWS ecosystem, but they require too much stitching together to create reliable, end-to-end workflows.

On EMR, stitching together Spark, Hive, and Airflow becomes an operational burden: data pipelines are fragmented, maintenance is manual, and scaling can be a DevOps rabbit hole. Redshift, meanwhile, has its own challenges, such as rigid scaling, batch-first orientation, and proprietary formats that slow down AI/ML adoption.

Databricks, on the other hand, provides a comprehensive and integrated solution for managing data pipelines, with the performance, scalability, security, and collaboration features that make it the best place to run them. The future is unified and machine learning-ready. Many enterprises are moving to the Databricks Data Intelligence Platform, a unified, open, and scalable environment designed to simplify data and AI workflows. But even the best platforms need the right implementation partners, and that’s where Syren makes a difference.

Why Migrate from EMR

Let’s drill down into exactly why migrating from EMR makes sense. The challenges are well-known:

- Managing Spark clusters manually, including versioning and dependency management

- Hive metadata sprawl and fragile schema governance

- Disconnected orchestration via Airflow or custom scripts

- High DevOps overhead for job retries, scaling, and log management

- Slow startup times and idle cluster costs

Why Moving to Databricks Makes Good Business (and Technical) Sense

Before diving in deeper, here’s a snapshot of why the shift to Databricks makes sense. Databricks offers a modern lakehouse architecture that combines the best of data lakes and warehouses:

- Unified Architecture – Say goodbye to fragmented AWS services.

- Built-in Governance & Orchestration – Unity Catalog, jobs orchestration, and RBAC are native.

- AI-Native Design – Optimized for ML & GenAI, right out of the box.

- Photon Engine – A vectorized C++ query engine that can run Spark and SQL workloads 2x–3x faster, depending on the workload.

- Faster Time to Value – Simplify your stack, reduce DevOps, and move from idea to deployment faster.

What Does the Migration Involve?

At Syren, we follow a proven 6-step migration framework aligned with Databricks' best practices, with Syren’s proprietary accelerators built in to ensure that your move from EMR is smooth and optimized for performance.

Migration Discovery and Assessment

Understand what you currently have: data sources, pipelines, code, tools, and business needs, and identify any complexities and risks. Syren’s MetaDiscover, our in-house Metadata Discovery & Analysis Accelerator, programmatically scans and maps your entire environment, giving you a clear picture of tables, jobs, workflows, and dependencies.
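Once jobs and their dependencies are inventoried, one practical use of that map is deciding what to migrate first. The following is a minimal sketch of that idea, not a description of how MetaDiscover works: the job names and the `dependencies` inventory are hypothetical, and it assumes the discovery output can be reduced to "job depends on these upstream jobs".

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical inventory: each job maps to the set of jobs it depends on.
dependencies = {
    "load_orders": set(),
    "load_customers": set(),
    "join_orders_customers": {"load_orders", "load_customers"},
    "daily_report": {"join_orders_customers"},
}


def migration_waves(deps):
    """Group jobs into waves so that every job's upstream jobs sit in an earlier wave."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # jobs whose upstream jobs are all done
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = migration_waves(dependencies)
for number, jobs in enumerate(waves, start=1):
    print(f"Wave {number}: {', '.join(jobs)}")
```

Jobs in the same wave have no dependencies on each other, so they are candidates for migrating and validating in parallel.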

Architecture and Data Migration

Design the new architecture on Databricks and then move your actual data (from S3, on-premises systems, and other sources) into the Lakehouse. Syren's InfraBootstrap automates your workspace setup, Unity Catalog onboarding, resource provisioning, project-level components, and CI/CD bootstrapping.

Component Mapping

Match features or capabilities from your old system to their equivalents in Databricks, for example, how a certain ETL step or function translates into a Databricks construct.
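A component map can start as something as simple as a lookup table. The sketch below is illustrative only: the pairings in `COMPONENT_MAP` are common starting points rather than a fixed rule, and the inventory entries are hypothetical. Its main value is flagging components that have no agreed target yet.

```python
# Illustrative pairings only; the right target depends on the workload.
COMPONENT_MAP = {
    "Hive Metastore": "Unity Catalog",
    "Apache Airflow DAG": "Databricks Workflows job",
    "EMR step (spark-submit)": "Databricks job task",
    "Parquet table on S3": "Delta Lake table",
}


def map_components(inventory):
    """Split an inventory into components with a known target and ones needing review."""
    mapped, unmapped = {}, []
    for component in inventory:
        if component in COMPONENT_MAP:
            mapped[component] = COMPONENT_MAP[component]
        else:
            unmapped.append(component)
    return mapped, unmapped


# Hypothetical inventory from the discovery phase.
inventory = ["Hive Metastore", "Apache Airflow DAG", "Custom cron script"]
mapped, unmapped = map_components(inventory)

for source, target in mapped.items():
    print(f"{source} -> {target}")
print("Needs manual review:", unmapped)
```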

Data Pipeline Migration

Migrate your existing data processing pipelines (ETL jobs, workflows) to run on Databricks. This may involve using Delta Live Tables, Jobs, or Workflows in Databricks.

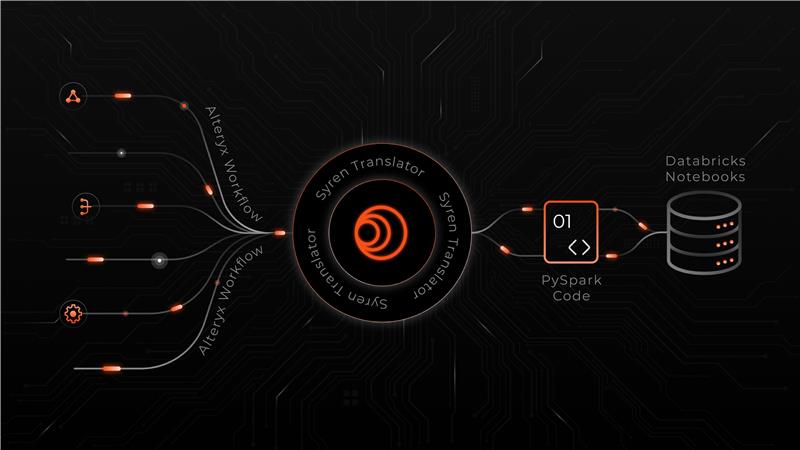
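To make the pipeline step concrete, here is a rough sketch of turning an orchestration graph into a multi-task job definition. It assumes the Databricks Jobs API request shape (`name`, `tasks`, `task_key`, `depends_on`, `notebook_task`), which should be checked against the current API reference; the DAG, task names, and notebook paths are hypothetical, and compute settings are deliberately left out.

```python
import json


def to_job_payload(job_name, dag):
    """Build a job definition dict from a {task: {"notebook", "upstream"}} mapping."""
    tasks = []
    for task_key, spec in dag.items():
        task = {
            "task_key": task_key,
            "notebook_task": {"notebook_path": spec["notebook"]},
        }
        if spec["upstream"]:
            # Each upstream task becomes an explicit dependency.
            task["depends_on"] = [{"task_key": u} for u in spec["upstream"]]
        tasks.append(task)
    # Cluster configuration is omitted here and would be needed for a real job.
    return {"name": job_name, "tasks": tasks}


# Hypothetical two-step pipeline previously orchestrated outside Databricks.
dag = {
    "ingest": {"notebook": "/Pipelines/ingest", "upstream": []},
    "transform": {"notebook": "/Pipelines/transform", "upstream": ["ingest"]},
}

payload = to_job_payload("nightly_sales", dag)
print(json.dumps(payload, indent=2))
```

Keeping the dependency structure explicit in the payload means retries and ordering are handled by the job scheduler rather than by custom scripts.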

Code Migration

Translate and optimize your existing PySpark, SQL, or Scala code to work on Databricks, which sometimes includes adapting it to take advantage of Photon or Delta Lake features. Syren’s CodeRefine Accelerator leverages industry-leading tools like Databricks Assistant and LLM-powered transpilers for fast, accurate code and pipeline refactoring.
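One small, recurring code change is moving from two-part Hive names (`schema.table`) to Unity Catalog's three-part names (`catalog.schema.table`). The sketch below shows the idea with a regular expression. It is not how CodeRefine works, and a regex is only a rough first pass: it does not parse SQL, so string literals or aliases that share a schema's name would need a real parser. The catalog and schema names are hypothetical.

```python
import re


def qualify_tables(sql, catalog, schemas):
    """Prefix schema.table references with a catalog, for the given schema names only."""
    if not schemas:
        return sql
    names = "|".join(re.escape(s) for s in schemas)
    # The lookbehind skips names that are already qualified or are part of a longer word.
    pattern = re.compile(r"(?<![\w.])(" + names + r")\.(\w+)")
    return pattern.sub(lambda m: f"{catalog}.{m.group(1)}.{m.group(2)}", sql)


legacy_sql = (
    "SELECT * FROM sales.orders o "
    "JOIN sales.customers c ON o.cid = c.id"
)
migrated_sql = qualify_tables(legacy_sql, catalog="main", schemas=["sales"])
print(migrated_sql)
```

Running the function a second time on its own output leaves the query unchanged, which makes it safe to apply repeatedly across a codebase.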

Downstream Tools Integration

Make sure your BI tools (Power BI, Tableau, etc.) and data consumers can still connect, and ensure that monitoring, alerting, and reporting continue to work.

Syren’s data engineering team has deep experience across Spark, Databricks, AWS, and large-scale orchestration systems. But more importantly, we bring:

- Advanced Accelerators to speed up code and pipeline migration

- AI-driven optimization to help our team deliver better, faster

- Governance blueprints for Unity Catalog, access control, and lineage

- A pilot-first, agile approach to see value in weeks, not quarters

The writing is on the wall: data and AI strategies are converging. A fragmented stack won’t keep up. If implemented well, Databricks offers the scale, performance, and simplicity to drive next-gen analytics. The emphasis is on ‘well’: if you’re planning an EMR or Redshift migration, let Syren be your co-pilot.

We’ll help you get to the Lakehouse faster and make sure the view is worth it!